Large Spatial Model: End-to-end Unposed Images to Semantic 3D

UCLA, GaTech, Stanford University, USC

NeurIPS 2024

Abstract

Reconstructing and understanding 3D structures from a limited number of images is a classical problem in computer vision. Traditional approaches typically decompose this task into multiple subtasks, involving several stages of complex mappings between different data representations. For example, dense reconstruction using Structure-from-Motion (SfM) requires transforming images into key points, optimizing camera parameters, and estimating structures. Following this, accurate sparse reconstructions are necessary for further dense modeling, which is then input into task-specific neural networks. This multi-stage paradigm leads to significant processing times and engineering complexity.

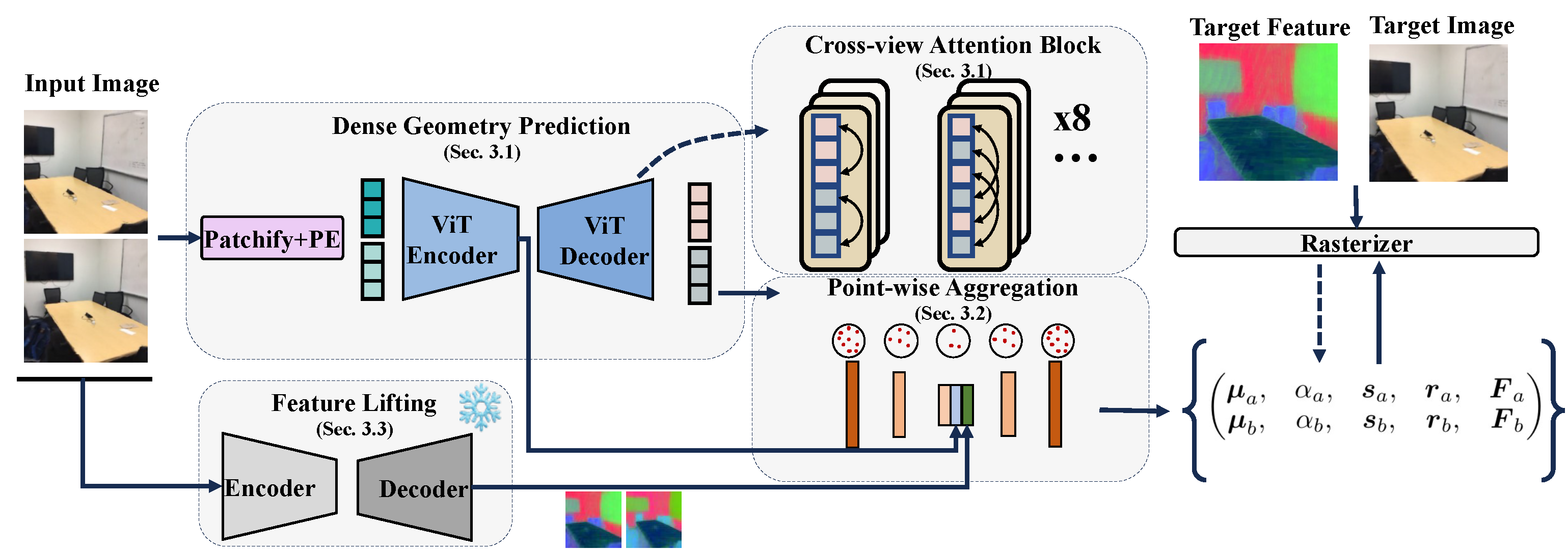

In this work, we introduce the Large Spatial Model (LSM), which directly processes unposed RGB images into semantic radiance fields. LSM simultaneously estimates geometry, appearance, and semantics in a single feed-forward pass and can synthesize versatile label maps by interacting through language at novel views. Built on a general Transformer-based framework, LSM integrates global geometry via pixel-aligned point maps. To improve spatial attribute regression, we adopt local context aggregation with multi-scale fusion, enhancing the accuracy of fine local details. To address the scarcity of labeled 3D semantic data and enable natural language-driven scene manipulation, we incorporate a pre-trained 2D language-based segmentation model into a 3D-consistent semantic feature field. An efficient decoder parameterizes a set of semantic anisotropic Gaussians, allowing supervised end-to-end learning. Comprehensive experiments on various tasks demonstrate that LSM unifies multiple 3D vision tasks directly from unposed images, achieving real-time semantic 3D reconstruction for the first time.
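As an illustration of how a label map can be produced from language queries, the following is a minimal sketch and not the LSM implementation. It assumes that the rendered feature field and the text queries share one embedding space, as is typical for language-based segmentation models, and that each pixel takes the label of the most similar query under cosine similarity. The function names, the toy 2-D features, and the query labels are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0

def label_map(feature_map, text_embeddings):
    """Assign each pixel the index of its most similar text embedding.

    feature_map: H x W grid of D-dim feature vectors (assumed to be
                 rendered from the semantic feature field at a novel view).
    text_embeddings: K vectors of dim D, one per language query.
    """
    labels = []
    for row in feature_map:
        labels.append([
            max(range(len(text_embeddings)),
                key=lambda k: cosine(pixel, text_embeddings[k]))
            for pixel in row
        ])
    return labels

# Toy 2x2 feature map with 2-D features.
# Hypothetical queries: index 0 = "wall", index 1 = "chair".
features = [[[1.0, 0.1], [0.2, 0.9]],
            [[0.8, 0.3], [0.0, 1.0]]]
queries = [[1.0, 0.0], [0.0, 1.0]]

labels = label_map(features, queries)
print(labels)  # [[0, 1], [0, 1]]
```

Changing the list of queries changes the label set without retraining, which is the sense in which such label maps are driven by language.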

Method Overview

Our method regresses pixel-aligned point maps from the input images using a generic Transformer. A second Transformer then predicts point-based scene parameters, using local context aggregation and hierarchical fusion. The model lifts pre-trained 2D features into a consistent 3D feature field. It is supervised end-to-end, minimizing a loss that compares rasterized outputs, including feature maps, at novel views against ground truth. At inference, the model predicts the scene representation without requiring camera parameters, enabling real-time semantic 3D reconstruction.
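The novel-view supervision described above can be sketched as a two-term objective. This is a hedged illustration only: the exact loss terms and weights are not given here, so the sketch assumes a mean-squared photometric term on rasterized colors plus a cosine-distance term between rasterized features and features from the pre-trained 2D model. The names `novel_view_loss`, `teacher_feats`, and `feat_weight` are hypothetical.

```python
import math

def mse(pred, target):
    """Mean squared error between two flat lists of values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def cosine_distance(a, b):
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm > 0 else 1.0

def novel_view_loss(rendered_rgb, gt_rgb, rendered_feats, teacher_feats,
                    feat_weight=0.5):
    """Assumed objective: photometric term + weighted feature term.

    rendered_rgb / gt_rgb: flat lists of color values at a held-out view.
    rendered_feats / teacher_feats: per-pixel feature vectors; the teacher
    features are assumed to come from the pre-trained 2D model.
    feat_weight: hypothetical balancing weight, not taken from the paper.
    """
    photometric = mse(rendered_rgb, gt_rgb)
    feature = sum(cosine_distance(f, t)
                  for f, t in zip(rendered_feats, teacher_feats)) / len(rendered_feats)
    return photometric + feat_weight * feature

# One pixel: colors fully wrong and features orthogonal to the teacher.
loss = novel_view_loss([0.0], [1.0], [[1.0, 0.0]], [[0.0, 1.0]], feat_weight=0.5)
print(loss)  # 1.5
```

Because both terms are computed on rasterized outputs at a held-out view, gradients would flow back through the renderer to the predicted scene parameters, which is what makes end-to-end training possible in a setup like this.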

BibTeX

@misc{fan2024largespatialmodelendtoend,
      title={Large Spatial Model: End-to-end Unposed Images to Semantic 3D},
      author={Zhiwen Fan and Jian Zhang and Wenyan Cong and Peihao Wang and Renjie Li and Kairun Wen and Shijie Zhou and Achuta Kadambi and Zhangyang Wang and Danfei Xu and Boris Ivanovic and Marco Pavone and Yue Wang},
      year={2024},
      eprint={2410.18956},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2410.18956},
}